EARS Dataset

An Anechoic Fullband Speech Dataset Benchmarked for Speech Enhancement and Dereverberation

Julius Richter, Yi-Chiao Wu, Steven Krenn, Simon Welker, Bunlong Lay, Shinji Watanabe, Alexander Richard, Timo Gerkmann

Abstract

We release the EARS (Expressive Anechoic Recordings of Speech) dataset, a high-quality speech dataset comprising 107 speakers from diverse backgrounds, totalling more than 100 hours of clean, anechoic speech data. The dataset covers a large range of different speaking styles, including emotional speech, different reading styles, non-verbal sounds, and conversational freeform speech. We benchmark various methods for speech enhancement and dereverberation on the dataset and evaluate their performance through a set of instrumental metrics. In addition, we conduct a listening test with 20 participants for the speech enhancement task, where a generative method is preferred. We introduce a blind test set that allows for automatic online evaluation of uploaded data. The dataset download links and the automatic evaluation server can be found online.

EARS Dataset

The EARS dataset is characterized by its scale, diversity, and high recording quality. In Table 1, we list characteristics of the EARS dataset in comparison to other speech datasets.

| dataset | hours | speakers | sample rate |
|---|---|---|---|
| DNS (LibriVox) | 556 | 1948 | 48 kHz† |
| LibriSpeech | 982 | 2484 | 16 kHz |
| LJSpeech | 24 | 1 | 22.05 kHz |
| TIMIT | 5 | 632 | 16 kHz |
| VCTK | 44 | 110 | 48 kHz |
| WSJ0 | 29 | 119 | 16 kHz |
| EARS (ours) | 100 | 107 | 48 kHz |

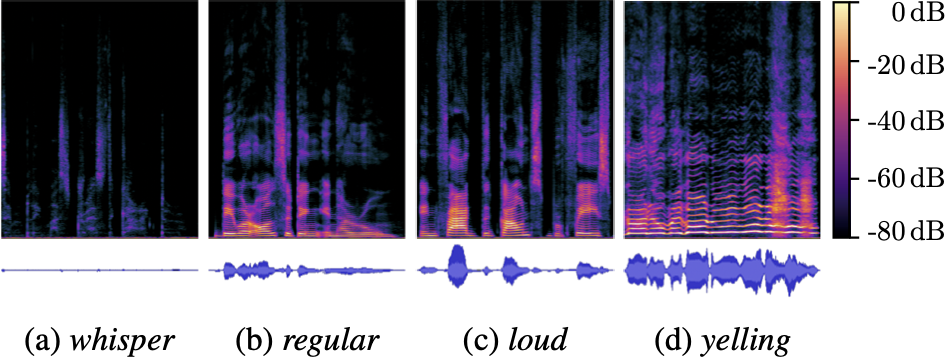

EARS contains 100 h of anechoic speech recordings at 48 kHz from over 100 English speakers with high demographic diversity. The dataset spans the full range of human speech: reading tasks in seven styles (regular, loud, whisper, fast, slow, high pitch, and low pitch), emotional reading and freeform speech in 22 different emotions, unconstrained conversational freeform speech, and non-verbal sounds such as laughter or coughing. We provide transcriptions of the reading portion and metadata of the speakers (gender, age, race, first language).

Audio Examples

Here we present a few audio examples from the EARS dataset.

p002/emo_adoration_sentences.wav

p008/emo_contentment_sentences.wav

p010/emo_cuteness_sentences.wav

p011/emo_anger_sentences.wav

p012/rainbow_05_whisper.wav

p014/rainbow_04_loud.wav

p016/rainbow_03_regular.wav

p017/rainbow_08_fast.wav

p018/vegetative_eating.wav

p019/vegetative_yawning.wav

p020/freeform_speech_01.wav
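The file names above suggest a layout of `<speaker>/<category>_<detail>.wav`. The following is a minimal sketch of how such paths could be split into their parts. The naming convention is inferred only from the examples listed here, and the function name `parse_ears_path` and the returned keys are hypothetical, not part of any official EARS tooling.

```python
from pathlib import PurePosixPath


def parse_ears_path(path: str) -> dict:
    """Split a path such as 'p012/rainbow_05_whisper.wav' into its parts.

    Assumes the layout '<speaker>/<category>_<detail>.wav', which is
    inferred from the example file names and may not hold for every file.
    """
    p = PurePosixPath(path)
    speaker = p.parent.name  # e.g. "p012"
    # Everything before the first underscore is treated as the category.
    category, _, detail = p.stem.partition("_")
    return {"speaker": speaker, "category": category, "detail": detail}


examples = [
    "p002/emo_adoration_sentences.wav",
    "p012/rainbow_05_whisper.wav",
    "p018/vegetative_eating.wav",
    "p020/freeform_speech_01.wav",
]
for example in examples:
    print(parse_ears_path(example))
```

Grouping files by the `category` field would then separate emotional speech (`emo`), reading styles (`rainbow`), non-verbal sounds (`vegetative`), and freeform speech (`freeform`), again assuming the inferred convention holds.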

Benchmarks

The EARS dataset enables various speech processing tasks to be evaluated in a controlled and comparable way. Here, we present benchmarks for speech enhancement and dereverberation tasks.

EARS-WHAM

For the task of speech enhancement, we construct the EARS-WHAM dataset, which mixes speech from the EARS dataset with real noise recordings from the WHAM! dataset. More details can be found in the paper.
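To illustrate what mixing speech with noise involves, here is a minimal sketch that scales a noise signal to reach a chosen signal-to-noise ratio (SNR) before adding it to the speech. This is a generic technique and not necessarily the exact EARS-WHAM procedure, which is described in the paper. The function name `mix_at_snr` and the `snr_db` parameter are assumptions made for illustration, and plain Python lists stand in for audio arrays.

```python
import math


def mix_at_snr(speech, noise, snr_db):
    """Return speech + gain * noise, with gain chosen to hit `snr_db`.

    Illustrative only: the SNR values and any level normalization used
    for EARS-WHAM are not specified here.
    """
    if len(speech) != len(noise):
        raise ValueError("speech and noise must have the same length")
    speech_power = sum(s * s for s in speech) / len(speech)
    noise_power = sum(n * n for n in noise) / len(noise)
    if noise_power == 0:
        raise ValueError("noise signal is silent")
    # Solve speech_power / (gain^2 * noise_power) = 10^(snr_db / 10) for gain.
    gain = math.sqrt(speech_power / (noise_power * 10 ** (snr_db / 10)))
    return [s + gain * n for s, n in zip(speech, noise)]


speech = [1.0, -1.0, 1.0, -1.0]
noise = [0.5, 0.5, -0.5, -0.5]
mixture = mix_at_snr(speech, noise, snr_db=0.0)
print(mixture)
```

At 0 dB the scaled noise has the same power as the speech. Lower SNR values make the noise louder relative to the speech.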

Results

| Method | POLQA | SI-SDR (dB) | PESQ | ESTOI | DNSMOS |
|---|---|---|---|---|---|
| Noisy | 1.71 | 5.98 | 1.24 | 0.49 | 2.74 |
| Conv-TasNet | 2.73 | 16.93 | 2.31 | 0.70 | 3.47 |
| CDiffuSE | 1.81 | 8.35 | 1.60 | 0.53 | 2.87 |
| Demucs | 2.97 | 16.92 | 2.37 | 0.71 | 3.66 |
| SGMSE+ | 3.40 | 16.78 | 2.50 | 0.73 | 3.88 |
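Of the metrics in the table, SI-SDR (scale-invariant signal-to-distortion ratio) has a simple closed form. The sketch below follows the commonly used definition: the estimate is projected onto the clean reference, and the energy of that projection is compared with the energy of the remainder. It assumes both signals are zero-mean and time-aligned, and it is not claimed to match the implementation used for these benchmarks or by the evaluation server. The function name `si_sdr` is chosen for illustration.

```python
import math


def si_sdr(estimate, reference):
    """Scale-invariant SDR in dB between an estimate and a clean reference.

    Assumes zero-mean, time-aligned signals of equal length.
    """
    if len(estimate) != len(reference):
        raise ValueError("signals must have the same length")
    dot = sum(e * r for e, r in zip(estimate, reference))
    ref_energy = sum(r * r for r in reference)
    # Optimal scaling of the reference that best explains the estimate.
    alpha = dot / ref_energy
    target = [alpha * r for r in reference]
    residual = [e - t for e, t in zip(estimate, target)]
    target_energy = sum(t * t for t in target)
    residual_energy = sum(x * x for x in residual)
    if residual_energy == 0:
        return math.inf  # estimate is a scaled copy of the reference
    return 10 * math.log10(target_energy / residual_energy)


reference = [1.0, 0.0, -1.0, 0.0]
estimate = [1.0, 1.0, -1.0, 1.0]
print(si_sdr(estimate, reference))
# Rescaling the estimate leaves the score unchanged.
print(si_sdr([3.0 * e for e in estimate], reference))
```

Because of the projection step, a method is not rewarded or penalized for changing the overall output level, which is why the metric is called scale-invariant.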

Audio Examples

Here we present audio examples for the speech enhancement task: the noisy input, the processed files for Conv-TasNet, CDiffuSE, Demucs, and SGMSE+, and the clean ground truth.

Blind test set

We create a blind test set for which we publish only the noisy audio files and not the clean ground truth. It contains 743 files (2 h) from six speakers (3 male, 3 female) who are not part of the EARS dataset, mixed with noise recorded specifically for this test set.

Results

| Method | POLQA | SI-SDR (dB) | PESQ | ESTOI | DNSMOS |
|---|---|---|---|---|---|
| Noisy | 1.81 | 6.48 | 1.28 | 0.57 | 2.79 |
| Conv-TasNet | 2.68 | 16.56 | 2.41 | 0.75 | 3.43 |
| CDiffuSE | 1.93 | 8.22 | 1.64 | 0.59 | 2.92 |
| Demucs | 3.03 | 16.81 | 2.50 | 0.76 | 3.62 |
| SGMSE+ | 3.35 | 16.43 | 2.59 | 0.78 | 3.79 |

Audio Examples

Here we present audio examples for the blind test set: the noisy input and the processed files for Conv-TasNet, CDiffuSE, Demucs, and SGMSE+.

Evaluation on real-world data

This demo showcases the denoising capabilities of SGMSE+ trained using the EARS-WHAM dataset. The red frame represents the noisy input audio, while the green frame indicates the enhanced, noise-reduced output.

Dereverberation (EARS-Reverb)

For the task of dereverberation, we use real recorded room impulse responses (RIRs) from multiple public datasets. We generate reverberant speech by convolving the clean speech with the RIR. More details can be found in the paper.
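The convolution step can be sketched in a few lines. The example below uses a direct, dependency-free implementation so that the operation is explicit. For real 48 kHz audio an FFT-based routine such as `scipy.signal.fftconvolve` would be the practical choice. The function name `apply_rir` is hypothetical, and details such as truncation, alignment, or level normalization of the output are not specified here and are left out.

```python
def apply_rir(clean, rir):
    """Convolve a clean signal with a room impulse response (full length).

    Direct O(N*M) convolution, written out for clarity rather than speed.
    """
    if not clean or not rir:
        return []
    out = [0.0] * (len(clean) + len(rir) - 1)
    for i, sample in enumerate(clean):
        for j, tap in enumerate(rir):
            # Each input sample excites a delayed, scaled copy of the RIR.
            out[i + j] += sample * tap
    return out


clean = [1.0, 2.0, 3.0]
rir = [1.0, 0.0, 0.5]  # toy RIR: direct path plus one reflection
print(apply_rir(clean, rir))  # [1.0, 2.0, 3.5, 1.0, 1.5]
```

The toy RIR has a direct path followed by a single reflection at half amplitude two samples later, so the output is the input plus a delayed, attenuated copy of itself.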

Results

| Method | POLQA | SI-SDR (dB) | PESQ | ESTOI | MOS Reverb |
|---|---|---|---|---|---|
| Reverberant | 1.75 | -16.17 | 1.48 | 0.52 | 2.99 |
| SGMSE+ | 3.61 | 5.79 | 3.03 | 0.85 | 4.73 |

Audio Examples

Here we present audio examples for the dereverberation task: the reverberant input, the processed files for SGMSE+, and the clean ground truth.

Citation

If you use the dataset or any derivative of it, please cite our paper:

@inproceedings{richter2024ears,
  title={{EARS}: An Anechoic Fullband Speech Dataset Benchmarked for Speech Enhancement and Dereverberation},
  author={Julius Richter and Yi-Chiao Wu and Steven Krenn and Simon Welker and Bunlong Lay and Shinji Watanabe and Alexander Richard and Timo Gerkmann},
  booktitle={ISCA Interspeech},
  pages={4873--4877},
  year={2024}
}